In part one of this series of articles, I wrote about Static Application Security Testing (SAST). In this second part, I turn my attention to Dynamic Application Security Testing (DAST).

Unlike SAST, which analyses static application source code, DAST analyses the application dynamically while it is running. This immediately pushes the dynamic analysis tool further right along the delivery pipeline than its static counterpart: ideally, it does its work after the application code has been integrated and is ready for functional testing. DAST has evolved over the years. When I first came across it, vendors offered a service in which compiled applications were submitted to a cloud-based platform, where skilled cybersecurity teams ran the application using their own analysis tools and filtered out any false-positive findings before returning a report listing the vulnerabilities they had discovered. Nowadays, vendors offer fully automated DAST solutions that allow engineers to run the analysis within their CI/CD pipeline.

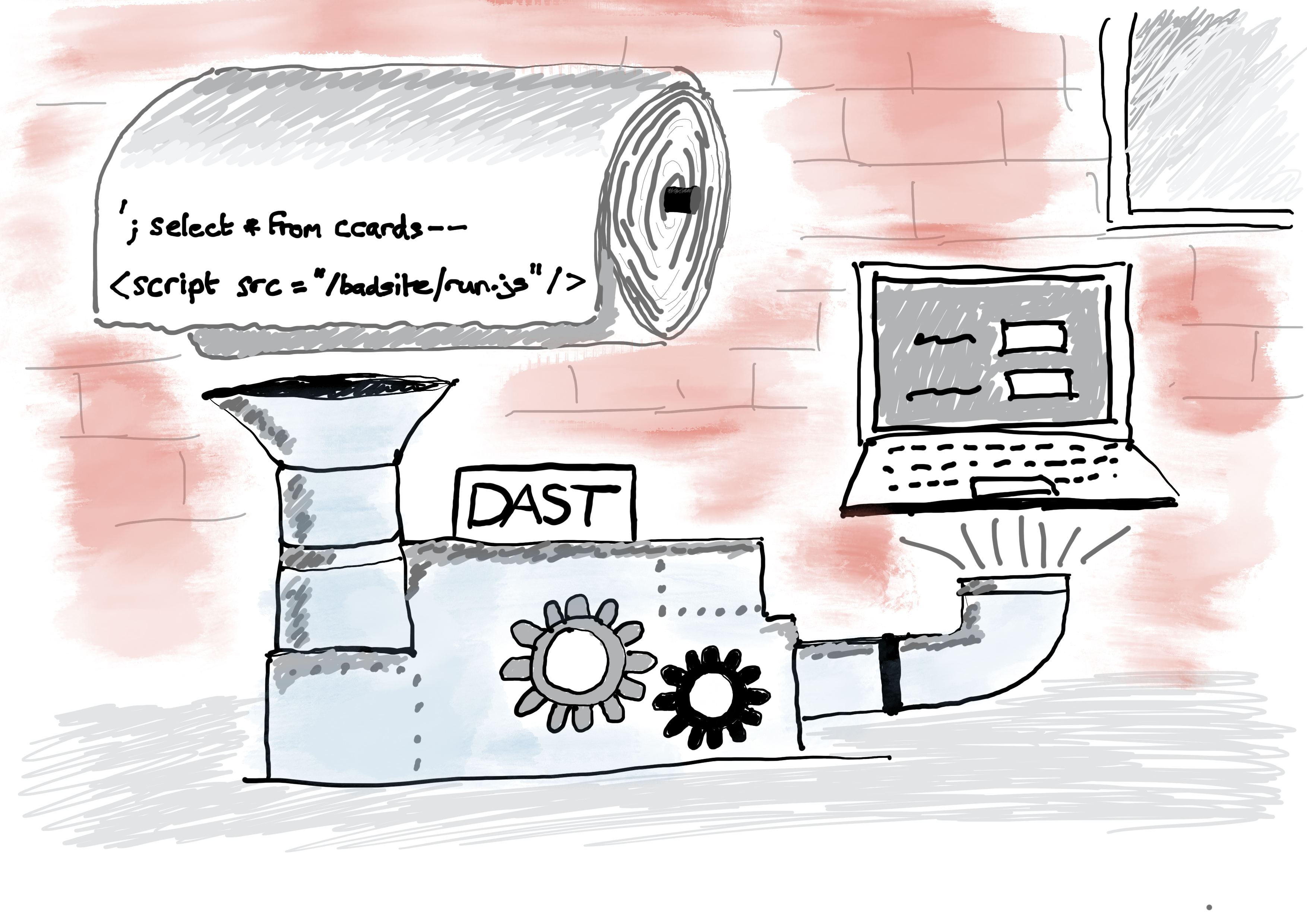

DAST tools require an element of planning before they can be integrated into the delivery pipeline. They are designed to replay the interactions between users and the normal functionality of an application, but in addition to using data associated with typical usage, they also inject malicious data typical of various security attack scenarios. This is called fuzzing. For example, if the application contains a simple form with two fields that each accept a string, the DAST tool will populate one field with a valid string and the other with a malicious string. If the application validates or sanitises the malicious value, the test passes; if the malicious string gets through unvalidated and unsanitised during the normal running of the application, the test fails.
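The two-field form example can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular DAST product works: the handlers, field names and payload list are all hypothetical, and a real tool would send HTTP requests to a running application rather than call a function directly.

```python
import html

# Illustrative payloads only; real DAST tools ship far larger, curated lists.
PAYLOADS = [
    "<script>alert(1)</script>",
    "\"><img src=x onerror=alert(1)>",
]

def safe_handler(name: str, comment: str) -> str:
    """Hypothetical form handler that escapes both fields before rendering."""
    return f"<p>{html.escape(name)}: {html.escape(comment)}</p>"

def unsafe_handler(name: str, comment: str) -> str:
    """Hypothetical handler that forgets to escape the comment field."""
    return f"<p>{html.escape(name)}: {comment}</p>"

def fuzz_form(handler, valid: dict) -> list:
    """Swap one field at a time for each payload, keeping the others valid.

    Returns the (field, payload) pairs whose payload came back unescaped,
    i.e. the failing tests.
    """
    failures = []
    for field in valid:
        for payload in PAYLOADS:
            data = dict(valid, **{field: payload})
            response = handler(**data)
            if payload in response:  # payload reflected verbatim: test fails
                failures.append((field, payload))
    return failures
```

Calling `fuzz_form(safe_handler, {"name": "Alice", "comment": "Hello"})` returns an empty list, whereas the same call with `unsafe_handler` reports both payloads against the `comment` field.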

DAST is designed to replay a whole range of attack scenarios that are typically carried out by hackers. In essence, it is an automated penetration test that uses normal functional test scripts as templates, running the same tests over and over with slightly different malicious payloads. Traditionally, manual penetration tests are carried out by highly skilled security teams, often on environments that are as close to production as possible; sometimes they are carried out on live environments. Unfortunately, due to the cost and timescales involved, such manual penetration tests are carried out late in the delivery lifecycle and often only as a tick-box exercise. Automated pen tests can be carried out much earlier, and multiple times, in the software delivery lifecycle without affecting project timescales. They also provide earlier feedback, allowing engineers to fix problems much more quickly than with manual penetration tests.

Dynamic code analysis does have its weaknesses. In particular, it requires considerable effort to configure and set up a suite of automated functional tests. For example, test engineers would need to record their manual functional tests using products like Selenium and store them as automated test suites. Once automated functional tests are available, engineers integrate them with DAST tools in order to run them multiple times. Each individual test generates a large number of penetration tests representing different attack scenarios. Often, the repetitive nature of the tests means they need further refinement to ensure that they run in isolation, preventing data clashes and session issues.
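To give a feel for how one functional test turns into many penetration tests, here is a rough sketch. The recorded journey, its URLs and the payloads are all made up for illustration; a real recording (from Selenium, say) would be richer, and a real DAST tool chooses its own injection points.

```python
import copy

# Hypothetical recorded journey: each step is a request with form fields.
RECORDED_TEST = [
    {"url": "/login", "fields": {"username": "alice", "password": "s3cret"}},
    {"url": "/search", "fields": {"query": "red shoes"}},
]

# Illustrative payloads only.
PAYLOADS = ["' OR '1'='1", "<script>alert(1)</script>", "../../etc/passwd"]

def attack_variants(recorded, payloads):
    """Yield copies of the recorded test with exactly one field replaced
    by one payload; every other value stays as it was recorded."""
    for index, step in enumerate(recorded):
        for field in step["fields"]:
            for payload in payloads:
                variant = copy.deepcopy(recorded)
                variant[index]["fields"][field] = payload
                yield variant
```

With three fields and three payloads, this single two-step journey already expands into nine variants. The count grows as fields multiplied by payloads, which is why isolation between runs matters so much.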

This leads to the second weakness: DAST can take a very long time to return results. Therefore, dynamic analysis is better suited to an asynchronous model of automated testing in which tests are normally run overnight. A further weakness of DAST, which I have already touched on above, is the potential for corrupting test data, which could cause the analysis to fail after a period of time. The obvious solution is to mock the data storage systems and supporting functions so that data is never stored, and to create unique sessions for each test. This can be difficult and time-consuming to set up and maintain. That said, with virtualisation now more accessible to organisations, test environments can be spun up easily and quickly to run DAST without affecting other test environments. Even so, some customer journeys are simply not possible to complete when fuzzing data, irrespective of the way DAST is configured.
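As a minimal sketch of that isolation idea, assuming the application's storage can be swapped for a test double, each run below gets its own session identifier and a throwaway in-memory store. The class and function names are hypothetical and not taken from any DAST product.

```python
import uuid
from contextlib import contextmanager

class InMemoryStore:
    """Stand-in for the real data store; nothing is ever persisted."""

    def __init__(self):
        self._rows = {}

    def save(self, key, value):
        self._rows[key] = value

    def get(self, key):
        return self._rows.get(key)

@contextmanager
def isolated_run():
    """Give each test its own session id and its own empty store."""
    session_id = uuid.uuid4().hex  # unique per run, so sessions never clash
    store = InMemoryStore()        # fresh per run, so fuzzed data never leaks
    yield session_id, store
```

A test wrapped in `with isolated_run() as (session_id, store):` can write whatever malicious data it likes; the next run starts with an empty store and a different session.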

This leads me on nicely to the topic of code coverage. The application code coverage of DAST is only as good as the coverage of the automated functional tests it uses. Therefore, if you only cover half the application’s functionality, you may be exposing your application to risks in the half that is not covered. In some cases, not all functionality is accessible within your application, so leaving these parts uncovered by DAST may not be an issue. On the other hand, if you have functionality available to your customers that isn’t regularly tested through DAST, you risk missing vital weaknesses in your application.
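One simple way to picture this is to compare the functionality your customers can reach with the functionality your functional tests actually exercise. The sketch below does this with sets of endpoint paths; the paths are invented for illustration, and in practice you would derive both sets from your own routing table and test suite.

```python
def dast_coverage(exposed: set, exercised: set):
    """Return the fraction of exposed endpoints that the functional tests
    exercise, plus a sorted list of exposed endpoints they never touch."""
    covered = exposed & exercised
    untested = exposed - exercised
    ratio = len(covered) / len(exposed) if exposed else 1.0
    return ratio, sorted(untested)

# Hypothetical endpoints reachable by customers.
exposed = {"/login", "/search", "/checkout", "/profile"}
# Hypothetical endpoints hit by the automated functional tests.
# "/admin" is tested but not customer-facing, so it does not count.
exercised = {"/login", "/search", "/admin"}

ratio, untested = dast_coverage(exposed, exercised)
```

Here `ratio` is `0.5` and `untested` is `["/checkout", "/profile"]`: these are the blind spots that DAST, driven by these tests, would never probe.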

One clear advantage of using DAST in your delivery pipeline is its proximity to real-life attack vectors. Automating the way that hackers try to manipulate your applications provides an effective way to validate your products against the most common security threats. Compared with SAST, DAST is much more accurate, producing a much lower ratio of false positives because it identifies exploitable weaknesses within the running application. Yet the complexity of setting up DAST, the length of time needed to run scans and the potential issues with data configuration limiting code coverage can be a hindrance to its successful implementation. But if you can overcome these hurdles, DAST is a great supplement to other testing tools such as SAST, covered in part one, and IAST, covered in part three.

In the next edition of the security test automation series, I will take a closer look at Interactive Application Security Testing (IAST).